
Index

Introduction

History

Basic Features

More Features

Distributed

Depth Mapping

Ontologic Scene Graph

Basic Implementations

Compositor Shells

Introduction

OntoGraphics is the underlying multidimensional graphics system of the OntoCOVE and OntoScope components, but most of its basic parts can also be used with any other Linux kernel-based operating system.

This webpage summarizes all information about OntoGraphics published so far.

History

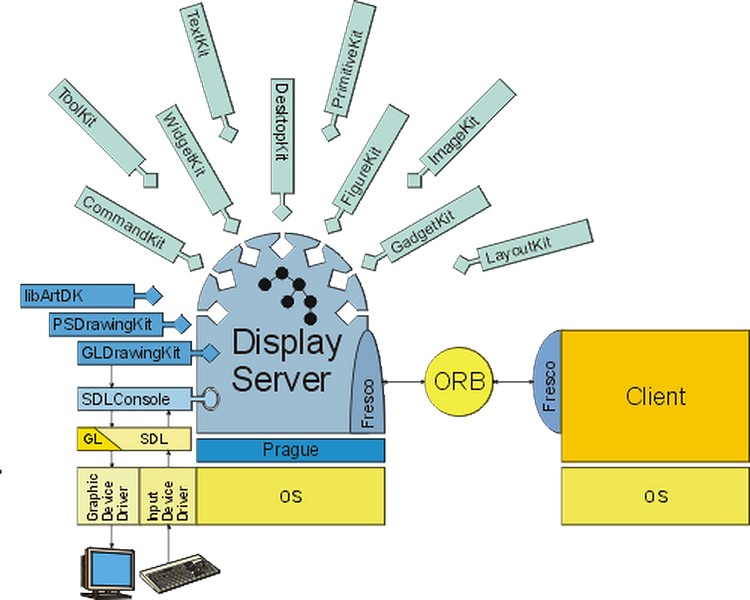

"The Fresco display server consists of a large data structure called a scene graph in which an abstract representation of the image you see on the screen is stored. The scene graph is constructed at runtime by client processes connecting to the display server and asking for new objects to be built and attached to the graph. Clients make such requests through a distributed object technology called [Common Object Request Broker Architecture (]CORBA[)]. Once objects are attached to the scene graph, a user interacts directly with them in the display server process, occasionally sending updates back to client processes to inform them of some change they might be interested in."

Metisse is the second prototype of a toolkit for exploring new window management techniques. It combines Ametista, which is based on the graphical desktop sharing system Virtual Network Computing (VNC), with the F Virtual Window Manager (FVWM) and a slightly modified version of the X.Org Server. "[It] is constituted by a virtual X server called Xwnc, a special version of FVWM and an FVWM module FvwmAmetista (after Ametista). [...] Xwnc is a mix of Xvnc (Unix VNC server) and XDarwin". When used together with the GNU's Not Unix! (GNU) Network Object Model Environment (GNOME), it is connected with CORBA by GNOME's underlying component model, and it is included in the Mekensleep Underware software library set.

Ametista and Metisse are based on the Nucleo library.

We substituted, transformed, and integrated Fresco as well as Nucleo, Ametista, and Metisse with the rest of Underware and the Virtual Object System (VOS) into our OntoGraphics, OntoCOVE, and OntoScope components, as well as into the OntoNet component with our Ontologic Request Brokers, through our overall Ontologic System Architecture (OSA).

At the end of the year 2005, we designed in relation to the integration of the X Window System/X11 and OpenGL, and to desktop environment frameworks ...

Further considerations are whether to run the whole OntoScope on this display server as well, and whether this should be done with or without the upcoming XWayland.

The display server framework Wayland is similar to what we designed.

At the end of the year 2005 or earlier, software layers like the desktop environment Metisse and the compositing 3D window manager Compiz based on the Open Graphics Library (OpenGL) were being discussed heavily in conjunction with the Direct Rendering Infrastructure (DRI). The same was true of the integration of the X.Org Server or X Server, with its computer software system and network protocol X Window System/X11, with OpenGL, called XGL, GLX, and Accelerated Indirect GLX (AIGLX), which superseded XGL. At that time we thought about a different approach based on a newly structured software architecture and stack.

In recent years, 50% of this new architecture has been implemented with the compositor protocol or display server framework Wayland. Simply put, Wayland integrates the display server with the compositor of the X Window System, but omits the (interprocess) communication and network protocols (see also the OntoLab and OntoLinux Further steps of the 24th of October 2012 (section History)). The latter can still be used by running the X Server on top of Wayland.

Our architecture goes even further and also covers the other 50%, which is the communication and network part of the X Server, basically in the same way as Wayland, or said otherwise on the same level as Wayland, with direct access to the operating system kernel space and the hardware. But we do not stop here: we also designed a general architecture with a kind of module or plug-in system. It makes it possible to use different protocols, like the graphical desktop sharing system Virtual Network Computing (VNC), the general object-oriented method call system Common Object Request Broker Architecture (CORBA), the Virtual Object System (VOS), the message bus system for InterProcess Communication (IPC) D-Bus, Remote Procedure Call (RPC) or Remote Method Invocation (RMI), and generally every other local and distributed communication protocol and messaging system.
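The plug-in idea described above, where the communication layer can be swapped between VNC, CORBA, VOS, D-Bus, RPC/RMI, and similar protocols, can be sketched as a registry of protocol factories. This is a minimal C++ sketch under our own assumptions. The classes Transport, TransportRegistry, and LoopbackTransport are hypothetical and do not wrap any real VNC, CORBA, or D-Bus library.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <vector>

// Hypothetical transport interface: each plug-in wraps one protocol
// (e.g. VNC, CORBA, D-Bus) behind the same send() call.
class Transport {
public:
    virtual ~Transport() = default;
    virtual std::string name() const = 0;
    virtual bool send(const std::vector<unsigned char>& message) = 0;
};

// Hypothetical registry: maps a protocol name to a factory, so the display
// server core never depends on a concrete protocol implementation.
class TransportRegistry {
public:
    using Factory = std::function<std::unique_ptr<Transport>()>;

    void registerFactory(const std::string& protocol, Factory factory) {
        assert(factory && "factory must be callable");
        factories_[protocol] = std::move(factory);
    }

    // Returns nullptr if no plug-in for the requested protocol is registered.
    std::unique_ptr<Transport> create(const std::string& protocol) const {
        auto it = factories_.find(protocol);
        return it == factories_.end() ? nullptr : it->second();
    }

private:
    std::map<std::string, Factory> factories_;
};

// Stand-in plug-in that only counts the bytes it would transmit.
class LoopbackTransport : public Transport {
public:
    std::string name() const override { return "loopback"; }
    bool send(const std::vector<unsigned char>& message) override {
        bytesSent += message.size();
        return true;
    }
    std::size_t bytesSent = 0;
};
```

A real plug-in would wrap an actual protocol library behind send(). The registry keeps the core independent of which protocols happen to be loaded.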

To implement a quick desktop prototype, we also took a closer look at the display server protocol Wayland and the game builder OpenBlox, which has a built-in physics engine and Lego-like building blocks (see also the intelliTablet Announcement One Tablet Per Child (OTPC) and One Pad Per Child (OPPC/OP²C) #1 of the 17th of July 2012, for example). However, we concluded that the missing parts are exactly the ones we have to implement for our OntoScope anyway, so in the end it made no sense to spend time on this demonstrator. That said, we will focus on more interesting options, for example the combination of Wayland with the 3D content creation suite Blender, the OpenSceneGraph (OSG) library, the Resource Description Framework (RDF), and ....

Basic Features

The OntoGraphics has the following basic features, which were published before on the webpage of OntoCOVE:

- It is fast, because it is based on a consistent and highly optimized 3D technology metaphor that includes the hardware and the software.

- In the software part of the consistent 3D technology metaphor, 2D window systems are embedded in a 3D environment and not vice versa.

- It is based on the leading 3D graphics software library.

- It is based on the leading 3D scene graph software library.

- It has a large collection of extensions.

- It can be customized easily.

- It is compatible with the leading 3D applications of all kinds and fields.

More Features

Actually, the three game and simulation engines

are included in the OntoScope component. What we have not published so far is that, since years before the start of OntoLix and OntoLinux, we have also been looking for a more specialized graphics engine, which we want to integrate with the other game and simulation engines.

In this context, it is also interesting to note that Delta3D uses a Binary Space Partitioning (BSP) compiler in conjunction with the bounding sphere technique of the 3D graphics Application Programming Interface (API) OpenSceneGraph (OSG), though we definitely want to use other techniques as well, like the ones based on the Sparse Voxel Octree (SVO). Furthermore, the Blender developers and supporters are discussing the future of the BGE, and we are looking at the game engines of the id Tech series, specifically the id Tech 4 (used for Doom 3 and Quake 4) modified with elements of the id Tech 5 (used for Rage), which is used for the Doom 3 BFG Edition (see also the related Further steps of the 24th of October 2012 (section History)).
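Bounding sphere culling, as used in the combination of a BSP compiler and OSG bounding spheres mentioned above, reduces to one signed distance test per frustum plane. The following C++ sketch illustrates only that test. The types Vec3, Plane, and BoundingSphere are hypothetical stand-ins (OSG has its own osg::BoundingSphere and osg::Polytope classes, which are not used here), and the plane normals are assumed to be unit length and to point into the frustum.

```cpp
#include <array>
#include <cassert>

struct Vec3 { double x, y, z; };

// Plane in Hessian normal form: dot(n, p) + d = 0, with the unit normal n
// pointing to the inside of the frustum.
struct Plane { Vec3 n; double d; };

struct BoundingSphere { Vec3 center; double radius; };

// Positive on the inner side of the plane, negative on the outer side.
double signedDistance(const Plane& p, const Vec3& v) {
    return p.n.x * v.x + p.n.y * v.y + p.n.z * v.z + p.d;
}

// A sphere is culled as soon as it lies completely on the outer side of any
// plane; otherwise it is conservatively treated as (partially) visible.
bool isPotentiallyVisible(const BoundingSphere& s, const std::array<Plane, 6>& frustum) {
    assert(s.radius >= 0.0);
    for (const Plane& p : frustum) {
        if (signedDistance(p, s.center) < -s.radius) return false;
    }
    return true;
}
```

The test is conservative. A sphere near a frustum corner may be reported as visible even though it is outside, which is acceptable because it only costs an unnecessary draw, never a missing object.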

We also found a way to get point clouds into OpenSceneGraph (OSG) and are looking at whether and how we can connect it with the Visualization ToolKit (VTK) and other related libraries from fields such as

- Finite Element Method (FEM) technique,

- surface reconstruction by Poisson's equations, and

- Fast Fourier Transform (FFT) algorithms.

Please keep in mind that we no longer want to support scripting languages based on the ECMAScript standard or the programming language Python as primary languages, if at all. Instead, we favour C++ as the command language, the scripting or macro language, and the programming language, using the Cling C++ interpreter (see the related Further steps of the 24th of February 2014).

We continued our work related to the foundational 3D environment of our systems (see also the Further steps of the 11th of March 2014 (section More Features)) and made substantial progress:

- 1. We no longer need to look at whether and how we can connect OSG with the Visualization ToolKit (VTK), because OSG already offers a basic NodeKit for volume rendering with 3D textures, Level of Detail (LOD) including out-of-core or paged versions, and so on. A little work is needed to integrate the functionality of the Insight ToolKit (ITK) as well, though basic support already exists in OSG.

- 2. We found interesting solutions that add ray casting and ray tracing techniques in different static and dynamic/real-time variants to OSG. Advantageously, the OSG API already implements the K-dimensional tree (KdTree) space partitioning data structure internally, and an octree data structure is not a problem at all.

- 3. We also found an OSG NodeKit that provides post processing and General-Purpose computing on Graphics Processing Unit (GPGPU) functionality.

- 4. We also have descriptions for implementing efficient Sparse Voxel Octree (SVO) rendering techniques, including variants that use the ray marching technique, for example, for which we can use the OSG NodeKits and the techniques implemented with OSG mentioned in points 1., 2., and 3. Maybe OntoLix and OntoLinux will even get a 3D user environment and desktop with SVO.
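The key property of the Sparse Voxel Octree (SVO) mentioned in point 4. is that only occupied branches are stored. The following C++ sketch shows this data structure only (insertion and lookup), not the ray marching renderer. The class SparseVoxelOctree is hypothetical and unrelated to any OSG NodeKit.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <memory>

// Minimal sparse voxel octree over a cube of 2^depth voxels per axis.
// Only occupied branches are allocated, which is what makes it "sparse".
class SparseVoxelOctree {
public:
    explicit SparseVoxelOctree(int depth) : depth_(depth) {
        assert(depth > 0 && depth < 21);
    }

    void insert(std::uint32_t x, std::uint32_t y, std::uint32_t z) {
        assert(inBounds(x, y, z));
        Node* node = &root_;
        for (int level = depth_ - 1; level >= 0; --level) {
            auto& child = node->children[childIndex(x, y, z, level)];
            if (!child) child = std::make_unique<Node>(); // allocate on demand
            node = child.get();
        }
        node->occupied = true;
    }

    bool contains(std::uint32_t x, std::uint32_t y, std::uint32_t z) const {
        if (!inBounds(x, y, z)) return false;
        const Node* node = &root_;
        for (int level = depth_ - 1; level >= 0; --level) {
            node = node->children[childIndex(x, y, z, level)].get();
            if (!node) return false; // empty branch: nothing stored below
        }
        return node->occupied;
    }

private:
    struct Node {
        std::array<std::unique_ptr<Node>, 8> children;
        bool occupied = false;
    };

    // One bit per axis at the given level selects one of the 8 children.
    static int childIndex(std::uint32_t x, std::uint32_t y, std::uint32_t z, int level) {
        return static_cast<int>(((x >> level) & 1u) |
                                (((y >> level) & 1u) << 1) |
                                (((z >> level) & 1u) << 2));
    }

    bool inBounds(std::uint32_t x, std::uint32_t y, std::uint32_t z) const {
        const std::uint32_t size = 1u << depth_;
        return x < size && y < size && z < size;
    }

    int depth_;
    Node root_;
};
```

A ray marcher would descend the same child indices along a ray and skip whole empty branches at once. That skipping is where the speed of SVO rendering comes from.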

Further steps are focused on the following points:

- 5. Most likely, we will use the support of the Simple DirectMedia Layer (SDL) development library for the compositor protocol Wayland (SDL-Wayland) to create the related contexts (see also the related point 6.).

- 6. At least for backward compatibility with legacy applications, some kind of compositing window manager is needed, like Compiz, KWin, or Clayland. In the case of KWin and Clayland we already have Wayland support, as is the case with the Qt and Clutter application frameworks. Interestingly, KWin 5 uses QtQuick 2 and in this way pulls in the QOpenGL module of QtGui, so using QtWayland with QtCompositor would be straightforward. In this context, we are looking at whether it is the right way to nest, for example, a Compiz-Wayland session compositor in an SDL-Wayland session compositor.

- 7. We have to decide whether our way of integrating the 3D content creation suite Blender with its Blender Game Engine (BGE) and the Open CASCADE Technology (OCCT) is elegant enough.

- 8. As we already said in the Further steps of the 11th of March 2014 (this section), we are looking at an integration of a further graphics engine, like the Object-oriented Graphics Rendering Engine (OGRE) 3D or the id Tech 4 engine with elements of the id Tech 5 engine. This would be done in the same way as the integration of the other technologies (see also points 7. and 9.).

- 9. We are also working again on our old concept of a context manager. It should not be confused with the already existing scene and context managers, because it integrates different (OpenGL-based) engines into OSG in our special way, like the ones mentioned in points 6., 7., and 8., in addition to the possibility of integrating Qt.

- 10. We are not quite sure whether an OSG-Wayland compositor truly makes sense as a substitute for SDL-Wayland, but at least it is an interesting concept to think about.
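The context manager concept of point 9. is not specified in detail here, so the following is only a hypothetical C++ sketch of one possible core. Each engine registers callbacks that make its rendering context current or release it, and the manager guarantees that at most one engine's context is current at a time. The names ContextManager and Engine are assumptions. Real implementations would call EGL, GLX, or OSG context functions inside the callbacks.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>

// Hypothetical context manager: at most one engine's context is current.
class ContextManager {
public:
    struct Engine {
        std::function<void()> makeCurrent; // e.g. would wrap eglMakeCurrent()
        std::function<void()> release;     // e.g. would unbind the context
    };

    void add(const std::string& name, Engine engine) {
        assert(!name.empty() && engine.makeCurrent && engine.release);
        engines_[name] = std::move(engine);
    }

    // Switches to the named engine, releasing the previous one first.
    // Returns false if the engine is unknown.
    bool activate(const std::string& name) {
        auto it = engines_.find(name);
        if (it == engines_.end()) return false;
        if (current_ == name) return true; // already current: no switch needed
        if (!current_.empty()) engines_.at(current_).release();
        it->second.makeCurrent();
        current_ = name;
        ++switches_;
        return true;
    }

    const std::string& current() const { return current_; }
    int switches() const { return switches_; }

private:
    std::map<std::string, Engine> engines_;
    std::string current_; // empty while no engine is active
    int switches_ = 0;
};
```

Counting switches makes it easy to see how often engines interleave per frame. Context switches are expensive, so a scheduler built on such a manager would try to batch work per engine.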

Distributed

We have re-designed the software architecture of the remote 3D display toolkit VirtualGL (VGL) in three different ways:

- The first way is a design of a hybrid that uses both the old approach of indirect rendering, which sends OpenGL commands and 3D data from the server to the client(s), and the newer approach of direct rendering on the server and sending only the rendered 2D images to the client, as done with VGL. The reason for this design is that some tasks related to OpenGL-based rendering could be done on the client side. To support and automate the decision about what should be rendered on the server side or on the client side, a binary network partitioning technique could be applied.

- The second way is a design of another hybrid that consists of the In-Process GLX Forking with an X Proxy (e.g. Virtual Network Computing (VNC)) and the In-Process GLX Forking with Image Encoding or VGL Transport. It abstracts the X Proxy with its X11 commands and X11 events in the same way as was done with GLX Forking, while still using the VGL Transport. In the case of VNC, this can be done with VNC Forking and a related VNC interposer, so that VGL Transport supports collaboration (multiple clients per session) on the server side without a separate screen scraper on the client side.

- The third way is a straightforward further development of the second way. It focuses on replacing the X Server, the GLX interposer or VGL, and the X Proxy interposer with two things: a compositor based on the protocol or display server framework Wayland, and a communication framework. The latter could be based on the Linux kernel-based D-Bus (kdbus) with the systemd-based D-Bus (sdbus), ZeroMQ, or nanomsg (see also the related Further steps of the 31st of January 2013 (2nd paragraph of section History)).
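For the first way, the decision whether a frame is rendered on the server or on the client can be illustrated with a simple per-frame cost model. The following C++ sketch is an assumption for illustration only. It compares the bytes needed for indirect rendering (OpenGL commands plus geometry the client does not have yet) with the bytes of a compressed rendered image. It is a stand-in heuristic, not the binary network partitioning technique named above and not VirtualGL behaviour. The types FrameWorkload and RenderSite are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

enum class RenderSite { Server, Client };

// Hypothetical per-frame workload description; fields and cost model are
// illustrative assumptions.
struct FrameWorkload {
    std::uint64_t commandBytes;     // serialized OpenGL commands for this frame
    std::uint64_t newGeometryBytes; // vertex/texture data the client lacks
    std::uint32_t width, height;    // framebuffer size in pixels
    double compressionRatio;        // expected image codec ratio, e.g. 10.0
    bool clientHasGpu;              // whether the client can render at all
};

// Estimated bytes for sending a rendered frame (VGL-style image transport).
double imageBytes(const FrameWorkload& w) {
    assert(w.compressionRatio > 0.0);
    return 4.0 * w.width * w.height / w.compressionRatio; // 4 bytes per RGBA pixel
}

// Picks the site whose estimated transfer cost is smaller.
RenderSite chooseSite(const FrameWorkload& w) {
    if (!w.clientHasGpu) return RenderSite::Server;
    const double indirectBytes = static_cast<double>(w.commandBytes + w.newGeometryBytes);
    return indirectBytes < imageBytes(w) ? RenderSite::Client : RenderSite::Server;
}
```

A partitioning technique would apply a decision like this per subtree of the scene rather than per frame, so that static geometry can stay on the client while heavy dynamic parts are rendered on the server.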

Depth Mapping

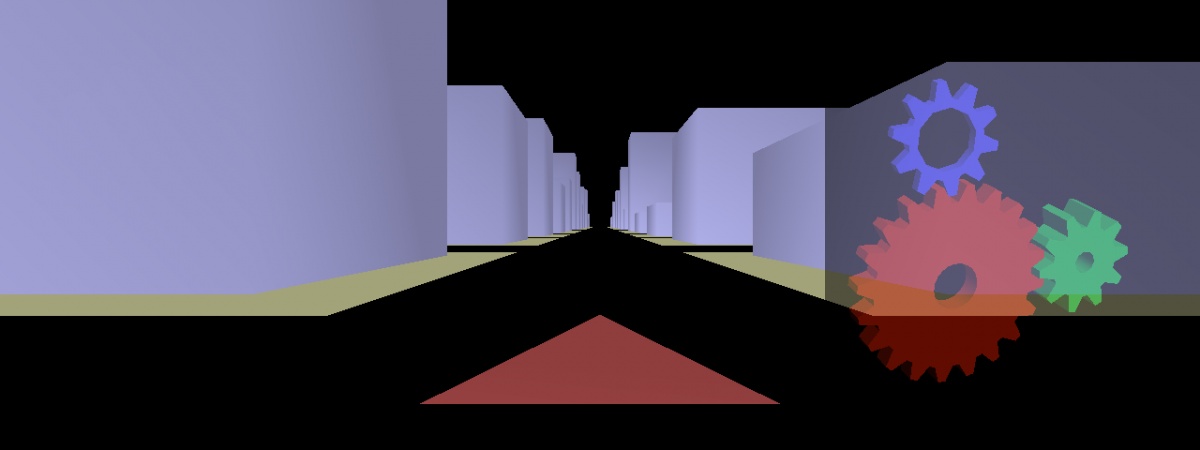

We continued to improve our OpenSceneGraph-based architecture with depth mapping, that is, analyzing a picture or video stream to determine the distance of the surfaces of scene objects from the viewpoint or camera. One approach to realize depth mapping is to re-use the depth buffer for shadow mapping, depth buffer to distance conversion, as well as mouse picking and ray tracing techniques, in conjunction with machine vision and, for example, the function gluUnProject(), which converts window (screen) coordinates to object coordinates.
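The depth buffer to distance conversion and the unprojection mentioned above can be sketched as follows. This is a minimal C++ sketch with several assumptions: the default glDepthRange of [0, 1], a symmetric perspective projection as built by gluPerspective, an identity modelview matrix, and a viewport starting at the origin. The function names depthToDistance() and unprojectPerspective() are hypothetical and not part of OpenGL, GLU, or OSG.

```cpp
#include <cassert>
#include <cmath>

struct EyePoint { double x, y, z; };

// Converts a depth-buffer value d in [0, 1] into the positive eye-space
// distance along the view axis for a perspective projection with near
// plane n and far plane f (inverse of the projection's depth mapping).
double depthToDistance(double d, double n, double f) {
    assert(0.0 < n && n < f && 0.0 <= d && d <= 1.0);
    const double zNdc = 2.0 * d - 1.0; // window depth -> normalized device depth
    return 2.0 * f * n / ((f + n) - zNdc * (f - n));
}

// Reconstructs the eye-space point under window pixel (winX, winY) for a
// symmetric perspective projection with the viewport at (0, 0, width, height).
// This matches what gluUnProject computes with an identity modelview matrix,
// but without a general 4x4 matrix inverse.
EyePoint unprojectPerspective(double winX, double winY, double depth,
                              int width, int height,
                              double fovyRadians, double n, double f) {
    assert(width > 0 && height > 0);
    const double aspect = static_cast<double>(width) / height;
    const double tanHalf = std::tan(fovyRadians / 2.0);
    const double xNdc = 2.0 * winX / width - 1.0;
    const double yNdc = 2.0 * winY / height - 1.0;
    const double dist = depthToDistance(depth, n, f);
    // OpenGL eye space looks down -z, hence the negated distance.
    return {xNdc * dist * aspect * tanHalf, yNdc * dist * tanHalf, -dist};
}
```

Reading one depth value under the mouse cursor (e.g. with glReadPixels) and passing it to such a function gives the picked 3D point. Doing it for every pixel turns the depth buffer into a depth map.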

In this way, we can easily add completely software-based gesture tracking and control to the User Interface (UI), even with common 2D cameras besides special 3D and depth cameras (see also the webpage Ontologic Applications and our original and unique MobileKinetic technology).

Ontologic Scene Graph

Besides the modeler and game engine of the OntoBlender component, which are based on the OpenGL software library, there is its node editor. It uses a system of graphical elements and widgets as nodes, which became the so-called (onto)logic bricks after the start of OntoLix and OntoLinux. These bricks are a combination of sensors, controllers, and actuators that are connected in the node editor to control the movement and display of objects in the engine.

semantic respectively ontologic scene graph
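The sensor, controller, and actuator chain of the logic bricks described above can be modelled as a small evaluation loop. The following C++ sketch is illustrative only. The types Sensor, ControllerKind, and LogicBrickChain are hypothetical and do not correspond to the Blender or BGE API, and only AND and OR controllers are shown.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// A sensor reports a boolean pulse when polled.
struct Sensor {
    std::string name;
    std::function<bool()> poll;
};

enum class ControllerKind { And, Or };

// One chain: all sensors feed one controller, which triggers the actuators.
struct LogicBrickChain {
    std::vector<Sensor> sensors;
    ControllerKind kind = ControllerKind::And;
    std::vector<std::function<void()>> actuators;

    // Evaluates one logic tick; returns true if the actuators were triggered.
    bool tick() const {
        assert(!sensors.empty());
        bool fire = (kind == ControllerKind::And); // neutral element of AND/OR
        for (const Sensor& s : sensors) {
            const bool positive = s.poll(); // every sensor is polled each tick
            fire = (kind == ControllerKind::And) ? (fire && positive) : (fire || positive);
        }
        if (fire) {
            for (const auto& act : actuators) act();
        }
        return fire;
    }
};
```

In a node editor, each chain corresponds to the sensor, controller, and actuator nodes that are wired together. Evaluating all chains once per frame yields the behaviour of the objects in the engine.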

Basic Implementations

We already have:

1. OpenSceneGraph (OSG) examples with utility libraries and frameworks like the OpenGL Utility Toolkit (GLUT; osgviewerGLUT), Simple DirectMedia Layer (SDL; osgviewerSDL), Qt application framework (osgviewerQt), and others,

2. the base class osgViewer::GraphicsWindow and the derived class osgViewer::GraphicsWindowX11 with bindings to EGL and OpenGL ES, and

3. Wayland compositors based on SDL, as well as on Qt and KWin.

As further steps, we are looking at the following points:

4. Implement the class osgViewer::GraphicsWindowWayland by using the Weston client sample simple-egl.c.

5. Implement OSG-Wayland/OSGLand 1.0 by simply merging the already available solutions mentioned in points 1. to 4. and deleting obsolete code in an appropriate way (see also the Further steps of the 22nd of March 2014).

6. Implement Wayland compositors with Simple and Fast Multimedia Library (SFML) and OpenGL FrameWork (GLFW) following the solutions named in point 3.

7. Develop OSGLand 1.0 further through support of the OpenGL Shading Language (GLSL; osg::Shader, osg::Program, osg::Uniform), specifically in relation to its handling of pixels, framebuffer and texture memory, and features such as drawing in one pass without render-to-texture (see OpenGL pipeline architecture, points 8. and 10., and wherever it fits), and optimize the resulting OSGLand 2.0.

8. Optimize further with techniques like Sparse Bindless Texture Array (SBTA), Multi-Draw Indirect (MDI), dynamic streaming, and so on, if not available already and if possible in this way (see again point 6. and also the Further steps of the 17th of March 2014 (section More Features)).

9. Use OSG features like osgViewer::CompositeViewer, osgWidget, and others where advantageous.

10. Implement communication frameworks (see the Further steps of the 14th of March 2014 (section Distributed)).
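Multi-Draw Indirect (MDI), mentioned in point 8., draws many meshes with one call by reading an array of draw commands from a buffer. The following C++ sketch only builds such a command array on the CPU, for meshes stored back to back in shared vertex and index buffers. The struct layout follows DrawElementsIndirectCommand from the OpenGL 4.3 specification, while MeshRange and buildIndirectCommands() are hypothetical helpers. Uploading the array to a GL_DRAW_INDIRECT_BUFFER and issuing the draw call are left out, so the sketch runs without an OpenGL context.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Same field layout as DrawElementsIndirectCommand in the OpenGL 4.3
// specification; fixed-width types keep the sketch free of GL headers.
struct DrawElementsIndirectCommand {
    std::uint32_t count;         // number of indices for this mesh
    std::uint32_t instanceCount; // number of instances to draw
    std::uint32_t firstIndex;    // offset into the shared index buffer
    std::int32_t baseVertex;     // added to every index of this mesh
    std::uint32_t baseInstance;  // offset for per-instance attributes
};
static_assert(sizeof(DrawElementsIndirectCommand) == 20,
              "tightly packed, as expected with a stride of 0");

// Hypothetical description of one mesh inside the shared buffers.
struct MeshRange {
    std::uint32_t vertexCount;
    std::uint32_t indexCount;
    std::uint32_t instances;
};

// Packs meshes that live back to back in one vertex buffer and one index
// buffer into an indirect command array for a single multi-draw call.
std::vector<DrawElementsIndirectCommand> buildIndirectCommands(const std::vector<MeshRange>& meshes) {
    std::vector<DrawElementsIndirectCommand> commands;
    commands.reserve(meshes.size());
    std::uint32_t firstIndex = 0, baseVertex = 0, baseInstance = 0;
    for (const MeshRange& m : meshes) {
        assert(m.indexCount > 0);
        commands.push_back({m.indexCount, m.instances, firstIndex,
                            static_cast<std::int32_t>(baseVertex), baseInstance});
        firstIndex += m.indexCount;  // next mesh's indices follow directly
        baseVertex += m.vertexCount; // indices stay mesh-local, offset here
        baseInstance += m.instances; // per-instance data is packed as well
    }
    return commands;
}
```

With such a buffer bound to GL_DRAW_INDIRECT_BUFFER, one glMultiDrawElementsIndirect(GL_TRIANGLES, GL_UNSIGNED_INT, nullptr, drawCount, 0) call replaces one draw call per mesh.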

Compositor Shells

Based on the general architecture, we develop specialized compositor shells on top of the foundational work of highly skilled third parties and ourselves.

In this way, we take consumer devices and applications from different areas to a new frontier. These devices and applications do not depend on full-fledged window management and a 3D environment, but need the most efficient way of presenting 2D and 3D multimedia content.

Indeed, the Wearland, Sportsland, Homeland, and Healthland shells are derived from a Weston shell for In-Vehicle Infotainment (IVI) systems and hence have the same high industrial quality features as their companion shell used in the field of automotive computing.

CC BY-SA 4.0 Genivi and OntoLab

As their labels easily suggest, the compositor shells constitute the ideal Graphical User Interface (GUI) platforms for embedded systems in the fields of wearable computers and iRaiments, home appliances, automation, health care and fitness, as well as mobility:

- Wearland,

- Sportsland,

- Homeland,

- Healthland, and

- Bizland.

Wearland

The Wearland compositor shell is the perfect basis of a Graphical User Interface (GUI) platform for:

- wearable computers, such as
  - digital feature watches,
  - connected watches,
  - smartwatches, and
  - smart eyewear,

- small mobile gadgets, and

- related applications.

Sportsland

The Sportsland compositor shell is a more specialized variant of the Wearland compositor shell, uses functions of the Healthland compositor shell, and provides a tried, tested, and already used basis of a GUI platform for all kinds of:

- wearable sport computers, such as
  - activity trackers,
  - smart bands,
  - digital feature sport watches,
  - connected sport watches,
  - smart sport eyewear, and
  - smart sportswear,

- sports-related applications.

Homeland

The Homeland compositor shell is designed as a foundation of a GUI platform for:

- home appliances, such as
  - coffee machines,
  - washing machines, and so on,

- consumer electronics, such as
  - clocks,
  - radios, and
  - televisions,

- home automation systems, and

- related applications.

Healthland

The Healthland compositor shell offers an already industry-proven, specialized foundation of a GUI platform for devices and applications related to:

- personal care,

- fitness, and

- health care.

Bizland

The Bizland compositor shell is another specialized variant of the Wearland compositor shell and provides an already tried, tested, and used specialized foundation of a GUI platform for all kinds of:

- wearable computers, such as
  - digital feature watches,
  - connected watches,
  - smartwatches,
  - smart eyewear, and
  - smart gloves,

that support applications related to professional tasks in areas such as:

- Enterprise Resource Planning (ERP),

- marketing and sales,

- assembly and production,

- repair, maintenance, and servicing,

- logistics and shipping,

- finance,

- payment, and so on.

Compositors

In contrast to the shells, the compositors

- IVILand,

- KinderLand, and

- OSGLand

provide the complete set and functionality of a 3D environment.

IVI-Wayland (IVILand)

For In-Vehicle Infotainment (IVI) systems we also use our OSGLand compositor.

Kinder-Wayland (KinderLand)

For our Little Nerds™ we use our OSGLand compositor for our Graphical User Interface (GUI) based on our:

- cubic approach,

- block-based open world game or block-building sandbox game,

- general Brick (desktop) environment,

- Lego Brick® Theme, and

- Ontocraft without the typical Lego® brick knobs,

that we developed for our learning environments SugarFox and Salt and Pepper, and the devices specifically designed for children, such as

- smartwatches,

- tablets, and

- laptops.

OpenSceneGraph-Wayland Compositor (OSGLand)

We were reminded of the concept of the scene graph and its surface nodes in the Wayland compositor Weston. In fact, we have already arrived at the OSG-Wayland compositor, which is called OSGLand from now on. Looking at it from the perspective of the weston_view structure also opens up a new view on OSGLand, because putting the OpenGL ES-based variant of OSG so deep into the compositor is a very smart alternative that we had not envisioned so far (see again points 5. to 10. of the Further steps of the 17th of March 2014 (section More Features)).
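Weston separates the content of a client surface (weston_surface) from its placement in the scene (weston_view), and this separation is what makes the comparison with a scene graph natural. The following C++ sketch mimics that split in a heavily simplified form to show back-to-front paint ordering and hit-testing. The types Surface and View and the functions paintOrder() and pick() are hypothetical and not part of libweston.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Client content, independent of where it is shown.
struct Surface {
    std::string client;
    int width, height;
};

// One placement of a surface in the scene; a surface may have several views.
struct View {
    const Surface* surface;
    int x, y;  // position in global compositor space
    int layer; // higher layers are drawn later, i.e. on top
};

// Returns the views in painter's-algorithm order (back to front).
// stable_sort keeps insertion order among views in the same layer.
std::vector<const View*> paintOrder(const std::vector<View>& views) {
    std::vector<const View*> order;
    for (const View& v : views) order.push_back(&v);
    std::stable_sort(order.begin(), order.end(),
                     [](const View* a, const View* b) { return a->layer < b->layer; });
    return order;
}

// Finds the top-most view under a point, e.g. for routing pointer input.
const View* pick(const std::vector<View>& views, int px, int py) {
    const auto order = paintOrder(views);
    for (auto it = order.rbegin(); it != order.rend(); ++it) {
        const View* v = *it;
        assert(v->surface != nullptr);
        if (px >= v->x && py >= v->y &&
            px < v->x + v->surface->width && py < v->y + v->surface->height)
            return v;
    }
    return nullptr;
}
```

In an OSG-based compositor, each view would instead become a node (for example a transform above a textured quad), and the scene graph traversal would take over both ordering and picking.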

A more detailed summary will follow in one of the next Further steps, after we have sorted out the old and the new variants.

We watched and analyzed the whole situation very thoroughly for several years and finally came to the following decision about our general software stack (simplified illustration):

6. Applications

5. Window managers, Graphical User Interfaces (GUIs), multimedia and application frameworks, game engines

4. Wayland with OpenSceneGraph (OSGLand) and/or similar and other direct media compositors

3. EGL, OpenGL, and OpenGL for Embedded Systems (OpenGL ES); EGL and OpenGL ES might be merged into OpenGL; OpenVG is already implemented on top of OpenGL (ES)

2. Operating systems and (Open Graphics Library (OpenGL)) hardware (Direct Rendering Infrastructure (DRI)) drivers

1. Hardware Abstraction Layer (HAL)

In this way, every framework gets a well-thought-out and flexible foundation upon which it can build. Examples are our compositor OSGLand as well as our OntoCOVE component with its signal/slot system, which has existed since the year 2006 (see also the Further steps of the 17th (section More Features) and 20th (beginning of this section) of March 2014).
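A signal/slot system, like the one of our OntoCOVE component mentioned above, lets one object notify any number of receivers without knowing them. The following C++ sketch shows the general mechanism only and is not the OntoCOVE implementation. The class template Signal is hypothetical, and connecting or disconnecting slots from inside a running emit() is not handled.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Minimal signal: stores connected slots and calls each of them on emit().
template <typename... Args>
class Signal {
public:
    using Slot = std::function<void(Args...)>;

    // Returns an id that can later be passed to disconnect().
    std::size_t connect(Slot slot) {
        assert(slot);
        slots_.emplace_back(nextId_, std::move(slot));
        return nextId_++;
    }

    void disconnect(std::size_t id) {
        for (auto it = slots_.begin(); it != slots_.end(); ++it) {
            if (it->first == id) { slots_.erase(it); return; }
        }
    }

    // Invokes all connected slots in connection order.
    void emit(Args... args) const {
        for (const auto& entry : slots_) entry.second(args...);
    }

private:
    std::vector<std::pair<std::size_t, Slot>> slots_;
    std::size_t nextId_ = 0;
};
```

A compositor could, for example, emit a resize signal that a scene graph node, a window manager, and a logging module all receive, without any of them referencing each other.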

Thanks to the effort of a highly skilled developer of the protocol or display server framework Wayland, we now have a general OpenGL shader generator for "all valid combinations of different input, conversion and output pipelines, which can easily be extended with more if desired". We can use it directly or at least re-use it as a blueprint for our OpenSceneGraph-Wayland (OSGLand) compositor.
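The idea of generating shaders for all valid combinations of input, conversion, and output stages can be sketched by prepending preprocessor defines to one shared GLSL body. In the following C++ sketch, the enums Input, Conversion, and Output, the validity rule, and the define names are our own assumptions for illustration. They do not reproduce the actual Weston shader generator.

```cpp
#include <cassert>
#include <initializer_list>
#include <string>
#include <vector>

// Hypothetical pipeline stages; names are illustrative only.
enum class Input { Rgba, Rgbx, YuvNv12 };
enum class Conversion { None, SrgbToLinear };
enum class Output { Plain, Premultiply };

struct ShaderVariant {
    Input input;
    Conversion conversion;
    Output output;
};

// Assumed validity rule for this sketch: opaque RGBX input has no alpha,
// so a premultiplying output stage is pointless and is skipped.
bool isValid(const ShaderVariant& v) {
    return !(v.input == Input::Rgbx && v.output == Output::Premultiply);
}

// Builds one fragment shader by prepending #defines to a shared GLSL body,
// so every variant comes from a single source.
std::string generateFragmentShader(const ShaderVariant& v, const std::string& body) {
    assert(isValid(v));
    std::string src = "#version 300 es\nprecision mediump float;\n";
    switch (v.input) {
        case Input::Rgba:    src += "#define INPUT_RGBA\n"; break;
        case Input::Rgbx:    src += "#define INPUT_RGBX\n"; break;
        case Input::YuvNv12: src += "#define INPUT_NV12\n"; break;
    }
    if (v.conversion == Conversion::SrgbToLinear) src += "#define CONVERT_SRGB_TO_LINEAR\n";
    if (v.output == Output::Premultiply) src += "#define OUTPUT_PREMULTIPLY\n";
    return src + body;
}

// Enumerates all valid combinations; extending a stage means adding an
// enum value and one more entry in the corresponding loop.
std::vector<ShaderVariant> allVariants() {
    std::vector<ShaderVariant> variants;
    for (Input i : {Input::Rgba, Input::Rgbx, Input::YuvNv12})
        for (Conversion c : {Conversion::None, Conversion::SrgbToLinear})
            for (Output o : {Output::Plain, Output::Premultiply})
                if (isValid({i, c, o})) variants.push_back({i, c, o});
    return variants;
}
```

Generating variants lazily, only when a surface with a new combination appears, keeps start-up cheap. Enumerating all of them up front allows compiling and validating every shader in advance.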

Zooming Text-Entry Interface

Dasher is an information-efficient predictive text-entry interface with word-completion, based on the Zooming User Interface (ZUI) paradigm and driven by continuous tracking of natural inputs by a user. Dasher is a competitive text-entry system wherever a full-size keyboard cannot be used, for example,

- when operating a computer one-handed, by joystick, touchscreen, trackball, or mouse,

- when operating a computer with zero hands (i.e. by head-mouse or eye-tracker),

- on a hand-held mobile device, and

- on a wearable computer together with Wearland,

by using our original MobileKinetic™/MobileKinect technology.
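Dasher's efficiency comes from its layout, which resembles arithmetic coding. The boxes for the next symbols get screen space in proportion to their probabilities under a language model, so likely symbols are large and easy to steer into. The following C++ sketch shows only this layout step, using a fixed probability table. The types and functions SymbolBox, layoutBoxes(), and symbolAt() are hypothetical and not taken from the Dasher sources.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// One symbol's box, given as a vertical extent within its parent box.
struct SymbolBox {
    char symbol;
    double top, bottom;
};

// Splits [parentTop, parentBottom) into boxes proportional to the given
// (symbol, probability) pairs. A real model would be context dependent.
std::vector<SymbolBox> layoutBoxes(const std::vector<std::pair<char, double>>& model,
                                   double parentTop, double parentBottom) {
    assert(parentTop < parentBottom);
    double total = 0.0;
    for (const auto& entry : model) {
        assert(entry.second >= 0.0);
        total += entry.second;
    }
    assert(total > 0.0);
    std::vector<SymbolBox> boxes;
    double y = parentTop;
    const double height = parentBottom - parentTop;
    for (const auto& entry : model) {
        const double h = height * entry.second / total; // normalized share
        boxes.push_back({entry.first, y, y + h});
        y += h;
    }
    return boxes;
}

// Returns the symbol whose box contains the pointer's vertical position,
// i.e. the symbol the user is currently steering into, or '\0' if none.
char symbolAt(const std::vector<SymbolBox>& boxes, double pointerY) {
    for (const SymbolBox& b : boxes)
        if (pointerY >= b.top && pointerY < b.bottom) return b.symbol;
    return '\0';
}
```

Zooming into the chosen box and calling layoutBoxes() again inside it, with probabilities conditioned on the text so far, produces the nested boxes that the user flies through.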

In addition, the variant Speech Dasher enables the creation of efficient Multimodal Multimedia User Interfaces™ (M²UIs™) for:

- continuous gesture recognition through tracking of the hand, eye, gaze, and further body parts of a user,

- more Kinetic User Interface (KUI) techniques through device tracking, like tilting,

- speech input, and

- language models.

"The eye tracking version of Dasher allows an experienced user to write text as fast as normal handwriting."

We developed Dasher further to make it even more efficient.